Digitization, Preservation and Ingest

=== Specifying a Naming Convention for Digitized Files ===

For a digital file intended for archiving and preservation, a name is not just a name. It is also an important descriptor of the item, and it should carry enough information to identify what the item is and what it contains, so that we can locate it in the archive and manage and preserve it properly. An important element of the digitization specifications is therefore the development and consistent application of a set of rules, a so-called “naming convention,” for the digital surrogates we create from physical items.
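To make this concrete, the sketch below composes and parses file names under a purely hypothetical convention, `<COLLECTION>_<ITEM>_<SEQUENCE>.<ext>` (for example `HRA_000123_001.tif`). The component order, the zero-padded widths, and the function names `build_name` and `parse_name` are illustrative assumptions, not a recommended standard. The point is that a convention written down as a fixed pattern can be both generated and checked automatically.

```python
import re

# Hypothetical convention: <COLLECTION>_<ITEM>_<SEQUENCE>.<ext>, e.g. HRA_000123_001.tif
# COLLECTION: 2-6 capital letters; ITEM: 6-digit item number; SEQUENCE: 3-digit page/part number.
NAME_PATTERN = re.compile(
    r"^(?P<collection>[A-Z]{2,6})_(?P<item>\d{6})_(?P<seq>\d{3})\.(?P<ext>[a-z0-9]+)$"
)


def build_name(collection: str, item: int, seq: int, ext: str) -> str:
    """Compose a file name from its identifying and technical components."""
    name = f"{collection.upper()}_{item:06d}_{seq:03d}.{ext.lower()}"
    if NAME_PATTERN.match(name) is None:
        raise ValueError(f"name does not follow the convention: {name}")
    return name


def parse_name(name: str) -> dict:
    """Split a conforming file name back into its components."""
    match = NAME_PATTERN.match(name)
    if match is None:
        raise ValueError(f"not a conforming name: {name}")
    parts = match.groupdict()
    parts["item"] = int(parts["item"])
    parts["seq"] = int(parts["seq"])
    return parts
```

For example, `build_name("hra", 123, 1, "TIF")` returns `"HRA_000123_001.tif"`, while an item number too long for six digits is rejected instead of silently producing a non-conforming name.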

=== Specifying File Formats and Quality ===

In addition to the file name of a digital surrogate, its file format and the quality standard to which it will be digitized must also be specified before digitization can begin in earnest.

=== Specifying Quality Standard(s) for Digitized Files ===

An important element of the digitization specifications is the standard of quality to which we want, and need, to digitize our physical items. This is usually referred to as the “resolution” of a digitized document, photograph, or video. A higher-resolution digital surrogate allows for a better user experience and a wider range of uses, and is overall a more faithful copy of its original than a lower-resolution file. However, higher resolution also means a larger file, which will take up more space on our storage media.
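The trade-off between resolution and storage can be estimated before any scanning starts. For a still image, the pixel count along each side is the physical size in inches multiplied by the resolution in pixels per inch (ppi), and the uncompressed size is the total pixel count times the bit depth divided by eight. The helper below is a rough sketch for still images only (audio and video sizes depend on other parameters); it ignores compression, file headers, and embedded metadata, and its name is hypothetical.

```python
def uncompressed_image_bytes(width_in: float, height_in: float, ppi: int,
                             bits_per_pixel: int = 24) -> int:
    """Estimate the uncompressed size of a scanned image in bytes.

    width_px  = width_in  * ppi
    height_px = height_in * ppi
    size      = width_px * height_px * bits_per_pixel / 8
    (24 bits per pixel corresponds to 8-bit RGB colour.)
    """
    width_px = round(width_in * ppi)
    height_px = round(height_in * ppi)
    return width_px * height_px * bits_per_pixel // 8


# An A4 page is about 8.27 x 11.69 inches.
for ppi in (300, 600):
    size_mb = uncompressed_image_bytes(8.27, 11.69, ppi) / 1_000_000
    print(f"A4 at {ppi} ppi, 24-bit colour: about {size_mb:.0f} MB uncompressed")
```

Doubling the resolution quadruples the pixel count: the A4 example comes to roughly 26 MB at 300 ppi and roughly 104 MB at 600 ppi, before any compression.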

=== Metadata: Descriptions of Digitized Files ===

In the section on planning and organizing a digital archive, we discussed the important process of describing the archival material in terms of several relevant attributes and linking those descriptions to the material by recording them in a table. This allows us to later search for, locate, and identify items and groups of items based on those descriptions, and to properly manage, preserve, and use the archival material. The same principle applies to the digital surrogates.
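As a minimal sketch of such a table for digital surrogates, the code below writes one row per surrogate file to a CSV register and looks rows up by any field. The column names (`file_name`, `physical_item_id`, `title`, `date`, `type`), the function names, and the sample rows are hypothetical placeholders for whatever attributes were chosen during planning. CSV is used here only because it is plain text and readable by most spreadsheet and archiving tools.

```python
import csv
import io

# Hypothetical description fields; use the attributes chosen during planning.
FIELDS = ["file_name", "physical_item_id", "title", "date", "type"]


def write_register(rows, stream) -> None:
    """Write one row per digital surrogate, linking its file to its descriptions.

    csv.DictWriter raises ValueError if a row contains a field not in FIELDS,
    which catches typos in column names early.
    """
    writer = csv.DictWriter(stream, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)


def find_rows(stream, field: str, value: str) -> list:
    """Return all register rows whose given field equals value."""
    return [row for row in csv.DictReader(stream) if row[field] == value]


# Made-up sample rows, for illustration only.
register = io.StringIO()
write_register(
    [
        {"file_name": "HRA_000001_001.tif", "physical_item_id": "HRA-1",
         "title": "Witness statement", "date": "1994-05-02", "type": "document"},
        {"file_name": "HRA_000002_001.tif", "physical_item_id": "HRA-2",
         "title": "Damaged house", "date": "1994-06-11", "type": "photograph"},
    ],
    register,
)
register.seek(0)
print([row["file_name"] for row in find_rows(register, "type", "photograph")])
```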

Making our metadata interoperable will save us significant time and resources (as well as headaches) later in the process, not least in the next step, when we need to ingest that metadata, along with the digital surrogate files to which it is linked, into our Digital Archiving System and make it operable there. These issues related to the processing of digital files and their metadata are discussed in more detail in the upcoming section, which looks at how all of our material, both digitized and born-digital, needs to be prepared for ingest into our Digital Archiving System.

=== Selection, Set-Up and Testing of Equipment: Software, Hardware, and Storage Media ===

This manual cannot provide recommendations on specific digitization equipment, software, or storage media, or on how they should be set up and optimized. Such advice would necessarily be too generic for the requirements of any specific project, and it would likely become obsolete quickly.

This is especially true given that set-up and fine-tuning are not a one-off activity: the process requires repeated testing and iterative changes before the required result is achieved. Testing should cover samples from the different groups of materials and involve the entire process of an item’s digitization (i.e., the digitization workflow).

=== Implementation: Digitization Workflow ===

The final stage of digitization is the implementation of all the elements we have planned, decided on, and devised in the previous stages. Digitization is a complex process, but if all of its parts and functions are planned and designed well and in advance, its implementation will be streamlined and fruitful.

Each digitization project will have its own workflow, with a specific sequence of actions and operations. Some activities, such as quality control, will be repeated at different stages of the process, while others will be carried out in parallel. Although the specific actions and their sequence are tailored to each project, we can identify the key elements required in any digitization workflow: preparation, process scheduling, digitization, quality control, post-processing, and storage and backup.

=== Preparation of Material, Protocols, and Workspace ===

The digitization process begins in earnest by ensuring a clean and appropriate workspace, with enough room for handling the physical materials as well as for the digitization equipment and a computer. Assuming that fragile or otherwise compromised material has already been removed, we can proceed to cleaning our physical material and removing any added items, such as paper clips or staples on documents.

The relevant digitization specifications (file naming, file resolution, and format), together with any metadata to be recorded, should be on hand and well organized.

=== Process Scheduling ===

As part of the workflow, it is essential to schedule the entire process clearly: that is, to determine, document, and then strictly apply an exact sequence of operations to be performed during digitization. The schedule should include buffer time for unexpected events.

Regardless of these differences, a good practice at the start of each digitization session is to digitize a reference item (a document, photograph, or short sample of audio or video) and review the result against the specifications as a form of ad hoc quality control. If the result deviates from the digitization specifications, the equipment can be checked and its set-up fine-tuned. This helps avoid wasting entire sessions of work because of equipment or set-up issues.

=== Post-processing ===

Post-digitization processing of digital surrogates involves making slight corrections to a file to bring it in line with a certain standard or a specific project specification. This could include actions such as improving the clarity of the sound in a video file or increasing the brightness of a scanned document.

=== Quality Review ===

There are two elements to digitization quality control, and both can and should be implemented at multiple points in the process schedule (i.e., both during and after digitization, as well as at regular intervals over the course of the project). The first element is ensuring that all physical items intended for digitization have indeed been digitized. This can be checked automatically by comparing the list of physical items with the list of their digital surrogates; however, this should also be accompanied by a manual check of a sample to ensure that the digital surrogates properly correspond to their physical originals.
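The automatic part of this check is essentially a comparison of two sets. The sketch below assumes a hypothetical naming convention, `<COLLECTION>_<ITEM>_<SEQUENCE>.<ext>`, so that an item identifier can be recovered from each surrogate’s file name; `item_id_from_name`, `completeness_report`, and the identifiers used are illustrative only. It reports items that were never digitized and surrogates that match no item in the inventory. It cannot replace the manual sample check of whether each surrogate really shows its original.

```python
def item_id_from_name(file_name: str) -> str:
    """Hypothetical rule: 'HRA_000123_001.tif' -> 'HRA_000123' (drop sequence and extension)."""
    stem = file_name.rsplit(".", 1)[0]
    return stem.rsplit("_", 1)[0]


def completeness_report(physical_ids, surrogate_names) -> dict:
    """Compare the inventory of physical items with the digital surrogates produced."""
    physical = set(physical_ids)
    digitized = {item_id_from_name(name) for name in surrogate_names}
    return {
        "not_digitized": sorted(physical - digitized),
        "no_matching_item": sorted(digitized - physical),
    }


inventory = ["HRA_000001", "HRA_000002", "HRA_000003"]
surrogates = ["HRA_000001_001.tif", "HRA_000001_002.tif",
              "HRA_000003_001.tif", "HRA_000009_001.tif"]
print(completeness_report(inventory, surrogates))
```

Here item `HRA_000002` is flagged as not yet digitized, and the file for `HRA_000009` is flagged because no such item appears in the inventory.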

=== Storing Digitization Products ===

At the end of the process, we need to store the products of digitization temporarily on one or more storage media until they are prepared and ingested into a Digital Archiving System. The end result of the process should be one or more digital surrogates of each original, often referred to as “master files.” These are stored in a file directory structure created for this purpose.

=== What Is Metadata and Data Documentation? ===

Metadata is data about data: in our case, information about the digital archival content. It is stored in a structured form suitable for software processing. Metadata is essentially the equivalent of archival descriptions of digital content. Indeed, the descriptions of our content that we made in the previous stage will now, in the Digital Archiving System, become metadata, alongside other types of metadata such as system-generated technical metadata or metadata on an item’s access history. Metadata is therefore necessary for long-term preservation and access, as it allows us to maintain the integrity, quality, and usability of the content.

=== Preparing Metadata and Data Documentation ===

While our digital files are safely stored and backed up on storage media awaiting ingest and archiving in the Digital Archiving System, we need to turn our attention to some housekeeping: preparing our metadata and data documentation for the upcoming process to ensure the smooth ingest and proper archiving of files.

Yet, regardless of these specifics, we will always need a clear overview (a map or scheme) of our metadata and data documentation and how they are related before we can begin the ingest.

=== Preservation and Preparation of Data for Archiving ===

We can now move on to the preservation actions and preparation of our digital data for ingest and archiving.

=== Cleaning ===

The first thing we should always do before working with digital data intended for preservation is run an antivirus scan, by connecting the storage media to a previously scanned computer that is not connected to any local network or to the internet.

=== Backup ===

Then comes the backup. At the end of the digitization process, we already created backups of the digital surrogates’ master files. If we have not yet done the same for the born-digital data, we should back it up now by producing two copies and storing them on separate storage media, if possible at two different locations.

=== File Naming ===

While our digital surrogates have already been named in line with the naming convention we developed and adopted, our born-digital files might still have their original names. We must therefore apply our naming convention to the born-digital files and rename them accordingly. Their names will then contain the same components (identification, description, technical, or other) as those we selected for the digital surrogates, as described in the digitization chapter. There are reasonably simple, easy-to-use software tools, such as “Rename Master” and “File Renamer Basic,” that can rename our digital files automatically within the parameters we set.
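Dedicated renaming tools are the practical choice, but the underlying logic is simple enough to sketch. Under a hypothetical `<COLLECTION>_<ITEM>_<SEQUENCE>.<ext>` convention, the code below first builds a rename plan without touching the disk, so it can be reviewed and saved as documentation of each file’s original name, and only then applies it. The function names and the numbering rule (files numbered in the order of their current names) are assumptions for illustration.

```python
import csv
from pathlib import Path


def plan_renames(folder: Path, collection: str, first_item: int = 1) -> list:
    """Propose (old_path, new_path) pairs for the files in folder, without renaming anything."""
    files = sorted(p for p in folder.iterdir() if p.is_file())
    plan = []
    for offset, path in enumerate(files):
        # Hypothetical convention: one item per file, sequence number fixed at 001.
        new_name = f"{collection}_{first_item + offset:06d}_001{path.suffix.lower()}"
        plan.append((path, path.with_name(new_name)))
    return plan


def save_plan(plan, csv_path: Path) -> None:
    """Record original and new names, so the renaming stays documented."""
    with open(csv_path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(["original_name", "new_name"])
        for old, new in plan:
            writer.writerow([old.name, new.name])


def apply_renames(plan) -> None:
    """Rename files according to the plan, refusing to overwrite existing files."""
    for old, new in plan:
        if new.exists():
            raise FileExistsError(f"{new} already exists")
        old.rename(new)
```

Keeping the saved plan alongside the archive preserves the link between a born-digital file’s original name, which may itself carry meaning, and its new standardized name.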

=== Metadata ===

In the previous section, we took stock of the metadata and data documentation collected thus far. As explained there, we will need to ingest our metadata in a specific, fixed format that our Digital Archiving System recognizes. This format will be based on the metadata standard we selected earlier in the process, which we now need to apply to the ingest of data into our Digital Archiving System.

If, as advised in this manual, we decided in the planning phase on the standard we would apply for metadata collection and implemented it through the description and digitization phases, then our metadata will already have been gathered in accordance with that standard. We should therefore be able to prepare it for ingest in the system-recognizable format with only basic technical arrangements, or by mapping our metadata to the standard. For example, in the digitization section, we mentioned that the basic “Dublin Core” metadata standard is supported by most digital archiving software. Hence, if we applied this standard to metadata collection from the beginning and selected software that supports it, we can now translate the collected metadata into a format our Digital Archiving System can recognize and properly ingest.
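As an illustration of that translation step, the sketch below maps a record from a local description table to simple Dublin Core elements and serializes it as an `oai_dc` XML record, one common way of exchanging Dublin Core. The local field names, the mapping, and the function name are hypothetical; the namespace URIs are the standard Dublin Core elements and OAI-PMH `oai_dc` ones. Which import format a particular Digital Archiving System actually accepts (XML, CSV, or something else) must be checked in its own documentation.

```python
import xml.etree.ElementTree as ET

DC_NS = "http://purl.org/dc/elements/1.1/"
OAI_DC_NS = "http://www.openarchives.org/OAI/2.0/oai_dc/"
ET.register_namespace("dc", DC_NS)
ET.register_namespace("oai_dc", OAI_DC_NS)

# Hypothetical mapping from local description fields to Dublin Core elements.
FIELD_MAP = {
    "file_name": "identifier",
    "title": "title",
    "creator": "creator",
    "date": "date",
    "type": "type",
    "description": "description",
}


def to_dublin_core(record: dict) -> str:
    """Serialize one record as an oai_dc XML string.

    Fields with no entry in FIELD_MAP raise an error rather than being silently dropped.
    """
    unmapped = sorted(set(record) - set(FIELD_MAP))
    if unmapped:
        raise ValueError(f"no Dublin Core mapping for: {unmapped}")
    root = ET.Element(f"{{{OAI_DC_NS}}}dc")
    for local_field, dc_element in FIELD_MAP.items():
        value = record.get(local_field)
        if value:  # skip empty values instead of writing empty elements
            ET.SubElement(root, f"{{{DC_NS}}}{dc_element}").text = value
    return ET.tostring(root, encoding="unicode")


print(to_dublin_core({"file_name": "HRA_000001_001.tif",
                      "title": "Witness statement", "date": "1994-05-02"}))
```

Failing loudly on unmapped fields is a deliberate choice: it forces every local attribute to be either mapped or consciously excluded before ingest.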

=== Preservation of Metadata ===

In the earlier discussion of metadata and the importance of its proper collection and management, we mentioned the key role it has for long-term preservation of digital archival data.

This becomes even more relevant at this point in the process, as we prepare our material for ingest and long-term preservation. Before we ingest and archive our data, we need to make sure that we capture the necessary metadata, which will allow our digital material to be adequately preserved, its authenticity maintained, and its usability ensured in the future. To understand which essential set of metadata we need to capture to preserve our invaluable data, we need to get to know our digital files and their formats a bit better, including their validity, quality, and fixity.

=== Identifying and Converting File Formats ===

Back in the digitization process, we established the need to store our digital material in file formats that are appropriate for long-term preservation. Primarily, these are formats that have a wide user and support community and have proven resilient to change over time. Such formats typically store data uncompressed or with “lossless” compression, as opposed to “lossy” formats, which permanently discard some information when a file is encoded and lose further quality each time it is re-encoded.
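File format identification tools such as DROID or Siegfried work mainly by matching a file’s internal byte signature (its “magic number”) against the PRONOM format registry, rather than trusting the file extension. The sketch below shows the principle with a handful of well-known signatures; the short table is an illustration, not a substitute for a registry-based tool, and the function name is hypothetical.

```python
from pathlib import Path

# A few well-known signatures found at the very start of a file.
SIGNATURES = [
    (b"\x89PNG\r\n\x1a\n", "PNG image"),
    (b"II*\x00", "TIFF image (little-endian)"),
    (b"MM\x00*", "TIFF image (big-endian)"),
    (b"%PDF-", "PDF document"),
    (b"\xff\xd8\xff", "JPEG image"),
]


def identify_format(path: Path) -> str:
    """Guess a file's format from its first bytes, ignoring its extension."""
    with open(path, "rb") as f:
        header = f.read(16)
    for magic, format_name in SIGNATURES:
        if header.startswith(magic):
            return format_name
    return "unknown"
```

A file named `report.tif` that actually begins with `%PDF-` would be reported as a PDF document: exactly the kind of mismatch worth catching before deciding whether a format conversion is needed.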

=== Validating Files ===

The next step in preparing our digital content for proper preservation in the Digital Archiving System is validation of our files: establishing that they really are what we think they are.
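Validation goes beyond identification: a file can carry the right signature and still be truncated or internally corrupt. Dedicated validators such as JHOVE check files against their format specifications in depth. As a small, self-contained illustration of the idea, the sketch below checks one format, PNG, whose specification gives every chunk a length, a type, and a CRC-32 checksum. It verifies the signature and each chunk’s CRC, and checks that the file starts with `IHDR` and ends with `IEND`. It is a partial check written for this example (the function name is hypothetical), not a complete PNG validator.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def validate_png(data: bytes) -> list:
    """Run basic structural checks on PNG bytes; an empty list means no problems found."""
    if not data.startswith(PNG_SIGNATURE):
        return ["missing PNG signature"]
    problems = []
    chunk_types = []
    pos = len(PNG_SIGNATURE)
    while pos < len(data):
        if pos + 8 > len(data):
            problems.append("truncated chunk header")
            break
        # Each chunk: 4-byte big-endian length, 4-byte type, data, 4-byte CRC.
        length, chunk_type = struct.unpack(">I4s", data[pos:pos + 8])
        name = chunk_type.decode("latin-1")
        end = pos + 8 + length + 4
        if end > len(data):
            problems.append(f"truncated {name} chunk")
            break
        # The CRC covers the chunk type and chunk data, not the length field.
        covered = data[pos + 4:pos + 8 + length]
        (stored_crc,) = struct.unpack(">I", data[pos + 8 + length:end])
        if zlib.crc32(covered) != stored_crc:
            problems.append(f"CRC mismatch in {name} chunk")
        chunk_types.append(chunk_type)
        pos = end
        if chunk_type == b"IEND":
            if pos < len(data):
                problems.append("unexpected data after IEND")
            break
    if not chunk_types or chunk_types[0] != b"IHDR":
        problems.append("first chunk is not IHDR")
    if not chunk_types or chunk_types[-1] != b"IEND":
        problems.append("missing IEND chunk")
    return problems
```

Returning a list of problems, rather than a single yes or no, gives us something concrete to record in the file’s preservation metadata when a check fails.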

=== Fixity ===

Fixity, a crucial element of the long-term preservation of files and of maintaining their integrity, authenticity, and usability, means the state of being unchanged or permanent. In essence, fixity checks allow us to determine whether a file has been altered or corrupted over time and to track and record any such changes.
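In practice, fixity is recorded as a checksum (a cryptographic hash such as SHA-256 or MD5) computed for each file and stored, for instance, in a manifest; recomputing the checksums later and comparing them with the stored values reveals any change. A minimal sketch using Python’s standard `hashlib`, with hypothetical function names:

```python
import hashlib
from pathlib import Path


def file_checksum(path: Path, algorithm: str = "sha256", chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so that large video files need not fit in memory."""
    digest = hashlib.new(algorithm)
    with open(path, "rb") as f:
        for block in iter(lambda: f.read(chunk_size), b""):
            digest.update(block)
    return digest.hexdigest()


def build_manifest(folder: Path) -> dict:
    """Map each file's path (relative to folder) to its checksum."""
    return {
        p.relative_to(folder).as_posix(): file_checksum(p)
        for p in sorted(folder.rglob("*"))
        if p.is_file()
    }


def fixity_failures(folder: Path, manifest: dict) -> list:
    """List files from the manifest that are now missing or whose checksum changed."""
    current = build_manifest(folder)
    return sorted(name for name, expected in manifest.items() if current.get(name) != expected)
```

The manifest is created once, before ingest, and stored with the files; running `fixity_failures` at regular intervals afterwards turns fixity from a principle into a routine check.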

Fixity also allows us to verify that any backup copies of a file are complete and correct. A file’s checksum can also be shared with other potential users of the file so that they can verify they have received the correct file. A range of software can perform fixity checks, such as “Checksum” and “Exact.File,” to name just a few.
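Checksums can also confirm that backup copies are intact. The sketch below copies a file into each of several destination folders (for example, two separate storage media) and compares each copy’s SHA-256 checksum with the original’s; it also shows how a recipient could check a received file against a checksum shared with them. The function names are hypothetical, and `shutil.copy2` is used so that file timestamps are preserved along with the content.

```python
import hashlib
import shutil
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Compute a file's SHA-256 checksum, reading it in 1 MiB chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for block in iter(lambda: f.read(1 << 20), b""):
            digest.update(block)
    return digest.hexdigest()


def copy_and_verify(source: Path, destinations) -> str:
    """Copy source into each destination folder and check every copy's checksum."""
    expected = sha256_of(source)
    for folder in destinations:
        folder.mkdir(parents=True, exist_ok=True)
        copy = Path(shutil.copy2(source, folder))
        if sha256_of(copy) != expected:
            raise IOError(f"checksum mismatch for {copy}")
    return expected


def matches_checksum(path: Path, expected: str) -> bool:
    """Let a recipient confirm that a received file matches the shared checksum."""
    return sha256_of(path) == expected.lower()
```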

=== Quality Control ===

Many things can go wrong with digital files as they are created, managed, and stored before they reach the point of ingest. During digitization, because of an error or a virus, files can be damaged, left incomplete, or reduced in quality. It is therefore good practice to perform as comprehensive a quality check of all our digital files as possible before their ingest and archiving. There is a whole set of tools that perform either a single quality control action or a group of them. Examples include NARA’s File Analyzer and Metadata Harvester, which has a range of functions, or, at the other end of the spectrum, the highly specialized “Fingerdet,” which helps detect fingerprints on digitized items.

=== Removing Duplicates and Weeding Files ===

While we are at it, we should use this opportunity to clean up our files a bit. Over the course of collecting, organizing, copying, and temporarily storing our digital files, we will likely have created duplicates, and folders may contain hidden files or files that do not belong in them. Duplicates and other unwanted files in our collection can create confusion, in addition to taking up unnecessary storage space. It is therefore good practice to remove them before ingest. Depending on the size of the collection, this can be a very time-consuming and error-prone task if performed manually. Fortunately, there are software tools that can do it for us efficiently and reliably, such as the dedicated tools “FolderMatch” and “CloneSpy.”
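The core of a duplicate finder can be sketched in a few lines: files with identical content produce identical checksums, and comparing file sizes first avoids hashing files that cannot be duplicates. The sketch below also lists hidden files by the Unix convention of a leading dot (such as `.DS_Store`); it does not read the Windows “hidden” attribute. It only reports candidates, since deciding which copy to keep, and deleting anything, should remain a human decision. The function names are hypothetical.

```python
import hashlib
from collections import defaultdict
from pathlib import Path


def _sha256(path: Path) -> str:
    """Hash a file in 1 MiB chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for block in iter(lambda: f.read(1 << 20), b""):
            digest.update(block)
    return digest.hexdigest()


def find_duplicates(folder: Path) -> list:
    """Return groups of files with identical content, each group sorted by path."""
    by_size = defaultdict(list)
    for path in folder.rglob("*"):
        if path.is_file():
            by_size[path.stat().st_size].append(path)
    by_hash = defaultdict(list)
    for same_size in by_size.values():
        if len(same_size) > 1:  # a file with a unique size cannot have a duplicate
            for path in same_size:
                by_hash[_sha256(path)].append(path)
    return sorted(sorted(group) for group in by_hash.values() if len(group) > 1)


def hidden_files(folder: Path) -> list:
    """List files whose names start with a dot (the Unix convention for hidden files)."""
    return sorted(p for p in folder.rglob("*") if p.is_file() and p.name.startswith("."))
```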

=== Metadata on Private, Sensitive, Confidential, or Copyrighted Data ===

Given the importance of data safety and security when archiving material related to human rights violations, it is highly advisable that, at this point, before the content is ingested, we carry out an additional review of the material with respect to privacy, sensitivity, confidentiality, and copyright.

Conveniently, standards and software have been developed to assist in this process.

=== Standards ===

Standards for metadata selection, collection, and use often include a full range of preservation metadata. Applying such metadata standards supports the preservation of digital items and ensures their long-term usability. A range of standards has been developed for handling preservation metadata and metadata in general. Because such a wide choice can make it hard to see which option fits, we recommend that an organization use the “Preservation Metadata: Implementation Strategies” (PREMIS) standard as a starting point.

| Line 478: | Line 478: | ||

It should be noted that different metadata standards will often be integrated, or at least compatible, with the software we use for metadata collection and management functions. | It should be noted that different metadata standards will often be integrated, or at least compatible, with the software we use for metadata collection and management functions. | ||

=== Software Tools === | |||

Thus far in this chapter we have mentioned examples of different software solutions that can perform specific preservation metadata collection and management functions, such as file identification, conversion, validity, and fixity checks. Such tools will indeed sometimes be designed to perform just one specific, or a group of similar, functions. However, these individual tools are also often used together as a more wide-ranging software solution, which can provide a full scope of preservation and metadata-related functions. Moreover, such multifunctional tools for metadata are then incorporated into comprehensive software solutions that can manage the entire process of digital archiving within a given Digital Archiving System. | Thus far in this chapter we have mentioned examples of different software solutions that can perform specific preservation metadata collection and management functions, such as file identification, conversion, validity, and fixity checks. Such tools will indeed sometimes be designed to perform just one specific, or a group of similar, functions. However, these individual tools are also often used together as a more wide-ranging software solution, which can provide a full scope of preservation and metadata-related functions. Moreover, such multifunctional tools for metadata are then incorporated into comprehensive software solutions that can manage the entire process of digital archiving within a given Digital Archiving System. | ||

| Line 497: | Line 497: | ||

=== Preparing the Digital Archiving System === | |||

Set-up and preparation of our digital archival system for its first ingest of digital files is a complex process that requires time, effort, patience, and reasonably advanced IT knowledge and skills. | Set-up and preparation of our digital archival system for its first ingest of digital files is a complex process that requires time, effort, patience, and reasonably advanced IT knowledge and skills. | ||

Revision as of 12:48, 16 January 2024

Introduction

Now that we have completed the planning and organizing stage and come out the other side safely armed with the General Plan, the table of the archive’s structure, descriptions of the material, and a decision on software and storage media for the Digital Archiving System, we are prepared for the next stage. This is where the actual magic happens: the creation of our digital archive.

Along with the great promise it brings, this stage is also the most dynamic and complex, as well as the most resource-heavy, expertise-driven, and technologically demanding for the organization.

Our goal at this stage is to process and prepare all selected material—both physical and born-digital—and to make it digital preservation-ready. This means that by the end of this stage, we will have the material prepared with respect to all necessary technical and archival requirements for transfer into our newly selected Digital Archiving System. This includes a series of actions using software and other technological tools that need to be applied to our selected source material to be able to properly archive it and preserve it long-term.

Additionally, if we are working to digitally preserve source material that is wholly or partially physical, this stage includes a major pre-step: digitization.

Digitization

Through the process of digitization, we create digital copies, or “surrogates,” of original physical items. These digital copies are then processed as digital archival objects, preserved, and made accessible. We will, therefore, be focusing on the preservation of these digital copies rather than the original physical items. Consult Addendum II for further guidance.

There are different types of physical objects we might want to digitize, and they can be stored on a variety of media. They include, for example, text, photographs, drawings, maps, video, audio, and other types of content stored on paper, audio cassettes, 16 mm film, or any other physical or analog storage media. They could also include objects such as pieces of clothing, banners, personal belongings, etc.

Clearly, the type of material we need to digitize will define both major and specific decisions to be made in the process—and each organization will make them in line with its goals and capacities. However, general elements of the process also need to be addressed in all digitization projects. This chapter outlines those elements of digitization that are relevant to the process regardless of the material's type, content, or storage media.

| BREAKING News: In-House Digitization May Cost More Than Outsourcing. |
|---|
| If the organization's capabilities are insufficient for the requirements of the digitization process, hiring an external company for the project should be considered. This decision may determine the success or failure of the program. Starting digitization with inadequate preparation, resources, and capacity could produce more costs than results, with little or no long-term value. On the other hand, a well-planned, well-executed, and quality-assured outsourcing option could save substantial time and effort. Hence, in-house digitization, with all the costs it involves, may sometimes cost the organization more than outsourcing the work. |

Digitization is a major, demanding archival project in and of itself and requires due attention, careful planning, and dedicated implementation. Since we are looking at digitization as part of a larger process of building a digital archive, we have already discussed some of the issues involved, mostly regarding the first few stages of the process. An overview of the digitization process is outlined in Figures 9a and 9b.

Figures 9a and 9b. Overview of the digitization process in four stages: 1. Planning; 2. Preparing Material; 3. Preparing Data/Tech (naming, format selection, standard of quality, collection of metadata; equipment, software, storage media; requirements, testing, fine-tuning); 4. Implementation.

In previous chapters, we discussed the development of a General Plan, the creation of an inventory, and the selection and description of the material, which are also the first steps of the digitization process. Having already covered the first two stages, we can therefore pick up the digitization process at the start of the third stage: preparing its archival and technological elements.

| BREAKING News: Digitization Can Be Done on a Small Scale and With a Modest Budget. |
|---|
| Small-scale digitization projects need to be adjusted to fit modest capacities and resources. Generally, that means only one or two people may be tasked with performing all the steps of the digitization process on one computer and with limited resources. The process is certainly slower, less efficient, and less reliable under those conditions, but it is doable and, whenever other options are not available, highly recommended. Any digitization work you can carry out can be highly significant, especially if the material is fragile and prone to deterioration. |

Specifying a Naming Convention for Digitized Files

For a digital file intended for archiving and preservation, a name is not just a name. It is also a very important descriptor of that particular item, which should contain information that allows us to identify what the item is and what it contains so we can locate it in the archive and properly manage and preserve it. Therefore, an important element of specifications for the process of digitization is development and application of a consistent set of rules, a so-called “naming convention” for digital surrogates we create from physical items.

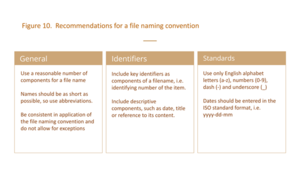

There are no universal rules for file naming, and each organization needs to develop the naming convention that best suits its archival needs. However, the name of a digital surrogate should always provide a reference, a connection between the file and the physical item from which it was digitized. In principle, a file name should contain several identifying components, such as the item's unique identification number, its date of creation, a reference to its content, or the series, subseries, or folder it belongs to.

We should also bear in mind that these file names primarily need to be processed and understood by software we will use for managing our digital archive. Hence, our primary concern in naming files is to apply a convention that will enable our Digital Archiving System to correctly identify the file and use information contained within it. However, many consider it a good practice to also include a descriptive component in a file name, one that could be understood by humans as well, for example a reference to its title or content.

While, as mentioned, there are no strict instructions for developing a naming convention, we can nevertheless identify some basic recommendations, as outlined in Figure 10.
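To make these recommendations concrete, here is a minimal Python sketch of a naming convention that software can both generate and check. The pattern itself (collection code, series, folder, item number, digitization date, and a short descriptor) is a hypothetical example, not a standard; each organization would substitute its own components.

```python
import re
from dataclasses import dataclass
from datetime import date

# Hypothetical convention, for illustration only:
#   <collection>_s<series>_f<folder>_<item>_<YYYYMMDD>_<descriptor>.<ext>
#   e.g. hra_s01_f003_00042_19950711_witness-statement.tif
PATTERN = re.compile(
    r"(?P<collection>[a-z0-9]+)"
    r"_s(?P<series>\d{2})"
    r"_f(?P<folder>\d{3})"
    r"_(?P<item>\d{5})"
    r"_(?P<date>\d{8})"
    r"_(?P<descriptor>[a-z0-9]+(?:-[a-z0-9]+)*)"
    r"\.(?P<ext>[a-z0-9]+)"
)


@dataclass(frozen=True)
class SurrogateName:
    collection: str   # short code for the archive or collection
    series: int
    folder: int
    item: int         # unique number of the physical item
    created: date     # date of digitization
    descriptor: str   # human-readable hint at the content
    ext: str          # file extension, e.g. "tif"


def slugify(text: str, max_words: int = 5) -> str:
    """Reduce a title to a few lowercase ASCII words joined by hyphens."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return "-".join(words[:max_words])


def build_filename(n: SurrogateName) -> str:
    """Compose a file name and refuse anything that breaks the convention."""
    name = (f"{n.collection}_s{n.series:02d}_f{n.folder:03d}_{n.item:05d}_"
            f"{n.created:%Y%m%d}_{slugify(n.descriptor)}.{n.ext}")
    if not PATTERN.fullmatch(name):
        raise ValueError(f"name violates convention: {name}")
    return name


def parse_filename(name: str) -> SurrogateName:
    """Recover the identifying components from a conforming file name."""
    m = PATTERN.fullmatch(name)
    if m is None:
        raise ValueError(f"name violates convention: {name}")
    d = m["date"]
    return SurrogateName(m["collection"], int(m["series"]), int(m["folder"]),
                         int(m["item"]), date(int(d[:4]), int(d[4:6]), int(d[6:])),
                         m["descriptor"], m["ext"])
```

For example, `build_filename(SurrogateName("hra", 1, 3, 42, date(1995, 7, 11), "Witness statement", "tif"))` returns `hra_s01_f003_00042_19950711_witness-statement.tif`. Enforcing the rule in code, rather than relying on names typed by hand, is what keeps a convention consistent across a whole project.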

Specifying File Formats and Quality

In addition to the file name of a digital surrogate, its digital format and the standard of quality to which it will be digitized also need to be specified before the process can begin in earnest.

Since the same type of content, such as a document, photograph, or video, can be stored in different digital formats, we need to specify which formats we will use for the digital surrogates created from our physical items.

Given that we are digitizing material with the purpose of long-term preservation, it is important that we select formats that will allow their proper viewing and use in the future by new generations of software. To prevent our digitized files from becoming obsolete, we should choose formats that are robust and resilient to change over time.

This means we should look for formats that meet the necessary standards and that are well-established and widely used, with substantial positive user feedback. The formats we select should also allow us to add information and metadata to the files and should have stable support, either commercial or from an open source community.

Clearly, we will be considering different sets of formats depending on the type of items we are digitizing—documents, photographs, video, etc. The scope of format options can be overwhelming and there is no universally ideal solution for each type of digitized content. The selection, again, depends on specific needs and circumstances of the archive. Nevertheless, there are formats that have a proven high level of robustness and resilience to change. Figure 11 provides an overview of such formats for the most frequently digitized types of physical items: documents, pictures, audio, and video.

Figure 11. Overview of robust digital formats for digitization of different types of physical items

| Physical Item Type | Robust Digital File Format |
|---|---|
| Documents | |
| Photographs | RAW or TIF |
| Slides and negatives | RAW or TIF |
| Audio | WAV |
| Video | MP4 |
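Anyone can change a file's extension, so checking that surrogates really are in the specified format means looking inside the file. The sketch below relies only on the well-known signatures ("magic numbers") of TIFF, WAV, and MP4 files. It is far less thorough than dedicated identification and validation tools such as DROID or JHOVE, mentioned elsewhere in this manual, and is meant only to show the principle.

```python
from pathlib import Path
from typing import Optional


# RAW is not handled separately: camera RAW formats vary by manufacturer,
# and several of them are built on TIFF, so they will be reported as TIF here.
def sniff_format(header: bytes) -> Optional[str]:
    """Guess the format of a file from its first bytes."""
    if header[:4] in (b"II*\x00", b"MM\x00*"):    # little/big-endian TIFF
        return "TIF"
    if header[:4] == b"RIFF" and header[8:12] == b"WAVE":
        return "WAV"
    if header[4:8] == b"ftyp":                     # ISO base media file;
        return "MP4"                               # also matches M4A and many MOV files
    return None


def check_format(path: Path, expected: str) -> bool:
    """True if the file's signature matches the format we specified."""
    with path.open("rb") as f:
        return sniff_format(f.read(12)) == expected
```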

Specifying Quality Standard(s) for Digitized Files

An important element of the specifications for the digitization process is the standard of quality to which we want and need to digitize our physical items. This is usually referred to as the “resolution” of a digitized document, photograph, or video. A higher resolution of a digital surrogate will allow for a better user experience and wider possibilities for its use—and overall a better copy of its original than a lower-resolution file. However, higher resolution also means that the digital surrogate will have a bigger digital size and will therefore take up more space in our storage media.

Therefore, in specifying the resolution of the digital surrogates we will create, we need to weigh the requirements for their quality standard with the demand it creates in terms of digital storage space for our archive.

As human rights organizations working with unique and invaluable material, we can easily be tempted to digitize all our material in the highest available resolution to ensure the best possible quality of the digital surrogates. However, this would be neither feasible nor sustainable, as it would create immense difficulties not only in storing, but also in processing and preserving such files long-term. Organizations therefore need to set digitization quality specifications in line with their goals and capacities. As guidance, Figure 12 provides an overview of what are often considered minimal and optimal resolution quality levels for digitizing different types of physical items.

Figure 12. Overview of minimal and optimal resolution quality levels for digitization of different types of physical items

| Item Type | Minimal Quality | Optimal Quality |
|---|---|---|
| Documents | 300 DPI | 600 DPI |
| Photographs | 600 DPI | 1,200+ DPI |
| Slides and negatives | 1,200 DPI | 2,400+ DPI |
| Audio | 16-bit, 44.1 kHz | 24-bit, 96 kHz |
| Video | 1080p or 2 megapixels | 2K+ or 4 megapixels |
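The trade-off between resolution and storage can be estimated before any scanning starts. The sketch below assumes uncompressed 24-bit colour images and uncompressed stereo PCM audio (as stored in a WAV file); real files add small headers, and lossless compression can reduce image sizes, so treat the results as rough estimates.

```python
MM_PER_INCH = 25.4


def pixel_dimensions(width_mm: float, height_mm: float, dpi: int) -> tuple[int, int]:
    """Pixels along each side when a page is scanned at the given DPI."""
    return round(width_mm / MM_PER_INCH * dpi), round(height_mm / MM_PER_INCH * dpi)


def uncompressed_image_bytes(width_mm: float, height_mm: float, dpi: int,
                             bits_per_pixel: int = 24) -> int:
    """Approximate size of an uncompressed image (24 bits = 8-bit RGB colour)."""
    w, h = pixel_dimensions(width_mm, height_mm, dpi)
    return w * h * bits_per_pixel // 8


def pcm_audio_bytes_per_minute(bit_depth: int, sample_rate_hz: int,
                               channels: int = 2) -> int:
    """Size of one minute of uncompressed PCM audio."""
    return bit_depth // 8 * sample_rate_hz * channels * 60


# An A4 page (210 x 297 mm) at the minimal and optimal document resolutions
for dpi in (300, 600):
    size_mb = uncompressed_image_bytes(210, 297, dpi) / 1e6
    print(f"A4 at {dpi} DPI: {pixel_dimensions(210, 297, dpi)} px, ~{size_mb:.1f} MB")

# One minute of stereo audio at the minimal and optimal audio settings
for bits, rate in ((16, 44_100), (24, 96_000)):
    size_mb = pcm_audio_bytes_per_minute(bits, rate) / 1e6
    print(f"{bits}-bit / {rate} Hz: ~{size_mb:.1f} MB per minute")
```

Run as is, it reports about 26.1 MB for an A4 page at 300 DPI and about 104.4 MB at 600 DPI: doubling the resolution roughly quadruples the storage needed. One minute of audio takes about 10.6 MB at 16-bit/44.1 kHz and about 34.6 MB at 24-bit/96 kHz.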

Metadata: Descriptions of Digitized Files

In the section dealing with the planning and organization of a digital archive, we discussed the important process of describing the archival material in terms of several relevant attributes and linking those descriptions to the material by recording them in a table. This is necessary, as it allows us to later search for, locate, and identify items and item groups based on those descriptions, as well as to properly manage, preserve, and use the archival material. The same principle applies to the digital surrogates.

After digitization, it is the digital files we create from the physical originals that will become the items in our digital archive. Hence, they also need to be described and have their descriptions attached to them so they can later be found, accessed, and preserved.

These linked descriptions of archival items are known as “metadata,” or data about data.

In the process of digitization, it is essential that relevant metadata is collected and attached to the digital surrogates we create. This is because, without its attached metadata, a digital surrogate becomes meaningless and unusable—as we might be unable to find or identify it or understand what it is, its context, history, creator, or where it belongs in the archive.

Most of the metadata we need to preserve is linked to the digital archival files it describes and is created and captured by the software tools we use to digitize, manage, and archive the data. This includes basic metadata (e.g., date of creation/digitization) as well as highly technical metadata, such as information on the validity or integrity of digital files. Concrete technical solutions for capturing and preserving different types of metadata are discussed further in the manual. Our main concern here, however, is to select which types of metadata we want and need to record and preserve for our digital archival files.

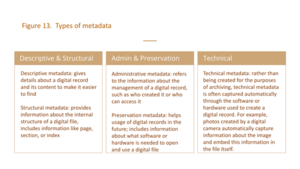

Compared with physical originals, digital surrogates require, and allow for, a whole range of additional metadata to be collected. This includes metadata such as technical specifications of an archival digital file, as well as information about its creation and any further digital action taken on it. For CSOs working with human rights material, such technical metadata is important not only for preservation but also for maintaining credibility of a digital surrogate and establishing the chain of custody.

There is a wide variety of types of metadata that could be collected about digital surrogates both during and after the digitization process. The most common types, based on their purpose and function, are summarized in Figure 13.

Selecting the metadata for any given digitization project will depend on its context and circumstances: an organization’s resources and capacities, the type of material, intended applications of it, types of access and user needs, among others.

Existing metadata standards and specific, tested, and widely used metadata profiles and sets provide guidance through the maze of numerous metadata types and formats. However, there are now so many different metadata standards and sets developed and proposed by different organizations that their sheer number by itself creates an obstacle to identifying those we want and need to use.

A good place to start is with the so-called “Dublin Core Metadata Element Set.” Dublin Core is a very widely applied set of 15 properties or elements for describing digital files. These elements are often considered a standard set of metadata that are applied almost regardless of the type of archival material, theme of the archive, or type of software used in the Digital Archiving System. Further, for preservation purposes, the so-called PREMIS metadata standard provides a useful reference and guidance (PREMIS: Preservation Metadata Maintenance Activity (Library of Congress)).

Whatever set of metadata we select for our collection, there is another set of decisions that we need to make about them to complete their digitization specifications. These include questions such as, Where will the metadata be stored? How will it be captured? When in the process do we capture it?

Making considered decisions related to these questions in advance of the digitization process will provide us with a plan for standardized and consistent collection and structuring of metadata throughout the digitization process. This is important in order to make our metadata “interoperable”—which means making it structured and formatted in a way that allows it to be read and used by different computer systems.
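As an illustration of structured, machine-readable metadata, the sketch below writes a Dublin Core record as XML using only Python's standard library. The 15 element names and the namespace URI come from the Dublin Core Metadata Element Set; the `record` wrapper element and the sample values are hypothetical, and a real Digital Archiving System will expect its own container format.

```python
import xml.etree.ElementTree as ET

DC_NS = "http://purl.org/dc/elements/1.1/"
# The 15 elements of the Dublin Core Metadata Element Set
DC_ELEMENTS = {
    "title", "creator", "subject", "description", "publisher", "contributor",
    "date", "type", "format", "identifier", "source", "language",
    "relation", "coverage", "rights",
}
ET.register_namespace("dc", DC_NS)  # serialize as dc:title rather than ns0:title


def dublin_core_xml(fields: dict[str, list[str]]) -> str:
    """Serialize a record using only Dublin Core elements (each may repeat)."""
    unknown = set(fields) - DC_ELEMENTS
    if unknown:
        raise ValueError(f"not Dublin Core elements: {sorted(unknown)}")
    root = ET.Element("record")  # hypothetical wrapper element
    for name, values in fields.items():
        for value in values:
            ET.SubElement(root, f"{{{DC_NS}}}{name}").text = value
    return ET.tostring(root, encoding="unicode")


print(dublin_core_xml({
    "identifier": ["hra_s01_f003_00042"],
    "title": ["Witness statement"],
    "date": ["1995-07-11"],
    "format": ["image/tiff"],
}))
```

Rejecting field names outside the agreed set is one simple way to keep metadata consistent, and therefore interoperable, across everyone who records it.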

Making our metadata interoperable will save us significant time and resources (as well as headaches) later in the process, not least in the next step when we need to ingest and make operable that metadata, along with the digital surrogate files to which it is linked, in our Digital Archiving System. These issues related to the processing of digital files and their metadata will be discussed in more detail in the upcoming section, where we look at how our entire material—both digitized and born-digital—needs to be prepared for ingest into our Digital Archiving System.

Selection, Set-Up and Testing of Equipment: Software, Hardware, and Storage Media

This manual cannot provide recommendations on specific digitization equipment, software, or storage media, or how it should be set up and optimized. Such advice would necessarily be too generic for requirements of any concrete project, and it would also be likely to quickly become obsolete.

However, we should mention three elements that need to guide our decisions in selecting the technology we use for digitization: characteristics of the material, an organization’s capacities and resources, and the archive’s needs and requirements.

First, the equipment we select and how it will be set up and fine-tuned depend on the material we are digitizing: its type, format, state of preservation, size/length of the originals, and quantity. Fragile material, for example, will require more refined and sensitive equipment and set-up, while large quantities of material will call for a solution that allows quick processing.

Further, our decisions will be dictated by the resources we have in terms of time, expertise, staff, space, and finances. Each of these aspects will set limits on what can be a feasible solution for our project.

| BREAKING News: More Expensive Equipment Can Bring Down Overall Digitization Costs |
|---|
| We should be mindful that although digitization can be done on a wide range of budgets, it is important to look at a project's total costs rather than at individual one-off costs, such as the price of a piece of equipment. Total project costs should include staff wages, equipment, time, etc. More expensive equipment that processes items more quickly, for example, could save us much more than it costs once staff time and wages are taken into account. |
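The point about total costs is simple arithmetic. The sketch below compares two hypothetical scanners; all prices, throughputs, and the wage are invented for illustration, and a real comparison would add other costs such as space, maintenance, and quality control time.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    equipment_cost: float   # one-off purchase price
    items_per_hour: float   # throughput measured during testing


def total_cost(option: Option, items: int, hourly_wage: float) -> float:
    """Equipment plus the staff wages needed to digitize every item."""
    staff_wages = items * hourly_wage / option.items_per_hour
    return option.equipment_cost + staff_wages


# Hypothetical figures: 50,000 pages, staff paid 15 currency units per hour
budget = Option("budget scanner", equipment_cost=1_000, items_per_hour=60)
faster = Option("faster scanner", equipment_cost=6_000, items_per_hour=300)
for option in (budget, faster):
    print(f"{option.name}: {total_cost(option, 50_000, 15):,.0f}")
```

With these figures the budget scanner totals 13,500 and the faster one 8,500: the cheaper machine needs about 833 staff hours against about 167, which more than outweighs its lower price.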

Finally, and most importantly, the needs of our archive and its future users as well as the modes of planned use for the materials we are digitizing should define the minimal and optimal requirements of the equipment.

For hardware and software, regardless of the type of material (documents, photographs, video, or other), the requirement will be to produce digital surrogates of the desired quality in adequate formats and to capture the selected metadata. For storage media, the most important aspects to consider are their reliability (resilience to data loss), durability (usability over a longer time period), and scalability (potential to expand the data storage space as required).

Once we have selected and obtained our equipment, we need to properly install and set it up in line with our digitization requirements. This process is important and needs to be done properly, otherwise even the right equipment will not yield required results. Hence, if an organization does not have internal expertise, external assistance would be advisable at this point.

This is especially true given that set-up and fine-tuning are not one-off activities: the process requires repeated testing and iterative changes before the required result is achieved. Testing should include a sample of the different groups of material and cover the entire process of an item's digitization (i.e., the digitization workflow).

Implementation: Digitization Workflow

The final stage of digitization is implementation of all the different elements that we have been planning, deciding on, and devising in the previous stages. Digitization is a complex process, but if all of its parts and functions are planned and designed well and in advance, its implementation will be streamlined and fruitful.

That is why, in putting all the elements together, we should develop a detailed digitization workflow that includes all of its actions and operations, from the review and preparation of physical items and the workspace through to storing the created digital surrogates and making backup copies.

Each digitization project will have its own unique workflow and specific sequence of digitization actions and operations. Further, some activities, such as quality control, will be repeated at different stages of the process, while others will be executed simultaneously or in parallel. Although specific actions and their sequence are tailored to each concrete project, we can identify the key elements required in any digitization workflow: preparations, process scheduling, digitization, quality control, post-processing, and storage and backup.

Preparation of Material, Protocols, and Workspace

The digitization process begins in earnest with ensuring a clean and appropriate workspace, allowing enough area for work with physical materials as well as for the digitizing equipment and a computer. Assuming that fragile or otherwise compromised material has already been removed, we can proceed to cleaning our physical material and removing any added items, such as paper clips or staples on documents.

Information and relevant digitization specifications about file naming, file resolution, and format, plus any metadata to be recorded should be on hand and well-organized.

Process Scheduling

As part of the workflow, it is essential to clearly schedule the entire process—that is, to determine, document, and then strictly apply an exact sequence of operations to be performed during the digitization process. The scheduling should include buffer time for unexpected events.
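A schedule is easier to apply strictly when it is written down in a form that can be checked. The minimal sketch below, with hypothetical step names, durations, and start date, lays one batch's operations out in a fixed order and stretches each step by a 20% buffer for unexpected events.

```python
from datetime import datetime, timedelta

# Hypothetical sequence for one batch, with durations in minutes
# estimated during testing; every batch follows the same order.
STEPS = [
    ("prepare items and workspace", 20),
    ("scan and check reference item", 10),
    ("scan batch", 90),
    ("quality control", 30),
    ("post-processing", 40),
    ("store and back up", 15),
]


def schedule(start: datetime, steps, buffer_percent: int = 20):
    """Lay the steps end to end, stretching each one by a buffer for the unexpected."""
    plan, t = [], start
    for name, minutes in steps:
        end = t + timedelta(minutes=minutes * (100 + buffer_percent) / 100)
        plan.append((name, t, end))
        t = end
    return plan


for name, begin, end in schedule(datetime(2024, 1, 15, 9, 0), STEPS):
    print(f"{begin:%H:%M}-{end:%H:%M}  {name}")
```

With these numbers, 205 minutes of planned work are scheduled over 246 minutes, from 09:00 to 13:06.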

Resource alert! Excellent examples of digitization workflows and scheduling for organizations dealing with the preservation of cultural heritage material are provided in “Technical Guidelines for Digitizing Cultural Heritage Materials,” issued by the USA Federal Agencies Digital Guidelines Initiative.

Digitization Processing

The process of digitization itself will clearly be very different depending on the type, volume, content, and other characteristics of the material. Paper documents and photographs can be scanned reasonably quickly, while analogue audio and video will need to be digitized in real time. Artwork and historical documents will require a different scanning set-up and specifications than an administrative document.

Regardless of the differences, a good practice at the start of each digitization session is to digitize a reference item (document, photograph, short sample audio or video) with the result reviewed against specifications as a form of ad hoc quality control. In case of any discrepancy from the digitization specifications, equipment can be checked and its set-up fine-tuned. This will help avoid wasting entire sessions of work due to equipment or set-up issues.
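For audio, part of this reference check can be automated. The sketch below uses Python's standard `wave` module to compare a reference clip against the optimal audio settings in Figure 12. It assumes plain PCM WAV files; on Python versions before 3.12, the `wave` module cannot read WAV files saved in the "extensible" variant that some recorders use for 24-bit audio and raises `wave.Error` instead.

```python
import wave

# Target taken from the "Optimal Quality" audio row of Figure 12
AUDIO_SPEC = {"bit_depth": 24, "sample_rate": 96_000}


def check_reference_wav(path: str, spec: dict = AUDIO_SPEC) -> list[str]:
    """List every way a reference WAV file deviates from the specification."""
    with wave.open(path, "rb") as w:
        bit_depth = w.getsampwidth() * 8
        rate = w.getframerate()
        frames = w.getnframes()
    problems = []
    if bit_depth != spec["bit_depth"]:
        problems.append(f"bit depth is {bit_depth}, expected {spec['bit_depth']}")
    if rate != spec["sample_rate"]:
        problems.append(f"sample rate is {rate} Hz, expected {spec['sample_rate']} Hz")
    if frames == 0:
        problems.append("file contains no audio")
    return problems
```

At the start of a session, an empty list from `check_reference_wav("reference_clip.wav")` (a hypothetical file name) means the set-up matches the specification; anything else is a reason to stop and fine-tune the equipment before digitizing the session's material.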

Post-processing

Post-digitization processing of digital surrogates includes making slight corrections to a file to bring it in line with a given standard or project specification. This could include actions such as improving the clarity of the sound in a video file or adjusting the brightness of a document image.

Post-processing might sometimes also include the creation of secondary, derivative copies of the file. These are created for specific purposes, such as providing access or producing high-quality reproductions, and also for creating fully searchable documents from originally non-searchable image files through the application of Optical Character Recognition (OCR) software. In essence, by running OCR software on our scanned image of a document, we add a layer of text to that image file that other software can read, which makes the document fully searchable. This is essential for making human rights archives more accessible and visible, which is often a key purpose of their digitization. Given the importance of OCR technology in creating fully searchable text files from our digital surrogate image files, in Addendum IV we provide a set of recommendations regarding its use.

Quality Review

There are two elements to digitization quality control, and both can and should be implemented at multiple points in the process scheduling (i.e., both during and after digitization, as well as at regular intervals over the course of the project). The first element relates to ensuring that all physical items intended for digitization have indeed been digitized. This can be done automatically by comparing the two sets of data for physical items and their surrogates; however, this should also be accompanied by a sample manual check to ensure that digital surrogates properly correspond to their physical originals.
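The automated part of this first check amounts to comparing two lists. The sketch below assumes, hypothetically, that every surrogate's file name starts with the inventory number of its physical original; with a different naming convention, only the line that extracts the ID would change. A sample manual check is still needed, since a correctly named file can still show the wrong item.

```python
from pathlib import PurePath


def completeness_report(inventory_ids, surrogate_files) -> dict[str, list[str]]:
    """Compare inventory item IDs with the IDs found in surrogate file names.

    Hypothetical assumption: each file name begins with the item ID followed
    by an underscore, e.g. "00042_witness-statement.tif".
    """
    found = {PurePath(f).stem.split("_", 1)[0] for f in surrogate_files}
    inventory = set(inventory_ids)
    return {
        "missing": sorted(inventory - found),      # not digitized yet
        "unexpected": sorted(found - inventory),   # no matching physical item
    }


report = completeness_report(
    ["00041", "00042", "00043"],
    ["scans/00041_letter.tif", "scans/00042_witness-statement.tif",
     "scans/00099_unknown.tif"],
)
print(report)  # {'missing': ['00043'], 'unexpected': ['00099']}
```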

The second element of quality review is ensuring that the digitization specifications have all been met: that the digital surrogates have been created in the right format and quality, with correct file names, and that the selected metadata has been captured. Here again we will need a combination of manual and automated quality review, supported by software tools and applications such as “JHOVE.”
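Part of this second check can also be scripted. The minimal sketch below flags surrogates whose recorded metadata lacks any field from a hypothetical minimum set; it does not validate the files themselves, which remains the job of tools such as JHOVE.

```python
# Hypothetical minimum set of fields every surrogate must carry
REQUIRED = ("identifier", "title", "date", "format")


def metadata_gaps(records: dict[str, dict]) -> dict[str, list[str]]:
    """Map each file name to the required fields that are missing or blank."""
    gaps = {}
    for filename, fields in records.items():
        missing = [k for k in REQUIRED if not str(fields.get(k) or "").strip()]
        if missing:
            gaps[filename] = missing
    return gaps


records = {
    "00042.tif": {"identifier": "00042", "title": "Witness statement",
                  "date": "1995-07-11", "format": "image/tiff"},
    "00043.tif": {"identifier": "00043", "title": "  ", "date": "1995-07-12"},
}
print(metadata_gaps(records))  # {'00043.tif': ['title', 'format']}
```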

Storing Digitization Products

At the end of the process, we need to temporarily store the products of digitization on one or more storage media until they are prepared and ingested into a digital archival system. The end result of the process should be one or more digital surrogates of the original, which are often referred to as “master files.” These are stored in a file directory structure created for this purpose.

Master files are the best-quality files we produce through digitization and are intended to be preserved long term without loss of any essential features. The number of master files we will create will depend on the content of the originals and the planned uses of the digital surrogate.

In addition to master files, we can also produce a number of secondary files, often called “access” or “service files.” These files are created from the master file and optimized for the intended use (e.g., for web or for research).

For organizations working with documentation on human rights abuses, it is especially important to note that these derivative files are also used to create files with fully searchable textual content through OCR. The usual practice is to store only master files for preservation purposes. However, given the importance of OCR, and therefore of fully searchable versions of documents, for human rights archives, it is advisable to also create and store two such searchable files, one as an access copy and the other for preservation purposes. The same applies to master files: we should create at least two backup copies and store them on two separate storage media whenever possible.
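Keeping several copies only helps if we can tell that they are still identical. A common way to do this is a fixity check: compute a checksum for every file and compare the values between copies, and over time. The sketch below uses SHA-256 from Python's standard `hashlib`; the directory layout (a primary copy and a backup copy with the same structure) is an assumption.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large master files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Checksum every file under root, keyed by its path relative to root."""
    return {p.relative_to(root).as_posix(): sha256_of(p)
            for p in sorted(root.rglob("*")) if p.is_file()}


def compare_copies(primary: Path, backup: Path) -> list[str]:
    """List files that are missing from, or differ in, the backup copy."""
    a, b = build_manifest(primary), build_manifest(backup)
    problems = [f"missing in backup: {k}" for k in a.keys() - b.keys()]
    problems += [f"checksum mismatch: {k}" for k in a.keys() & b.keys() if a[k] != b[k]]
    return sorted(problems)
```

Saving the manifest from the first run alongside the files would also let later runs detect silent corruption within a single copy, not only differences between copies.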

Preservation and Preparation for Ingest

We are now fully in the digital archival world.

All our material is now in a digital form.

We also have a digital archival repository—in the form of a Digital Archiving System.

To complete the process of creating a digital archive, we now need to employ a set of software-based digital archiving techniques on both our digitized and born-digital material. This is necessary to prepare it for ingest and long-term preservation in the Digital Archiving System. We also need to set up and prepare our Digital Archiving System itself—its databases and software tools and applications—to properly receive, store, and preserve our digital archival material.

To do that, we first need to review our basic archiving tools—the archival structure table and descriptions of material—which in this digital archiving world will take the form of databases and text files containing file directories, metadata, and data documentation. Therefore, it is necessary to clarify these two key concepts that are uniquely important for digital archiving—metadata and data documentation—which are necessary for understanding how our digital archival content is organized, described, related, managed, and used within a Digital Archiving System.

What Is Metadata and Data Documentation?

Metadata is data—information about data, about the digital archival content. It is stored in a structured form suitable for software processing. Metadata is essentially equal to archival descriptions of digital content. Indeed, the descriptions of our content that we made in the previous stage will now, in the Digital Archiving System, become metadata, thereby adding to other types of metadata such as system-generated technical metadata or metadata on an item’s access history. Metadata is therefore necessary for the goals of long-term preservation and access, as it allows us to maintain the integrity, quality, and usability of content.

Data documentation provides information about the context of our data, our digital archival content. It is often provided in a textual or other human-readable form. Data documentation in fact supplements metadata and provides information that enables others to use the archival content. For example, if we conduct a survey of victims and are preserving their filled-in questionnaires as our digital archival data, we should also preserve related data documentation (e.g., a document detailing the survey design and methodology). Given that data documentation is also “data about data,” it could also be seen as a specific type of metadata, one which provides context and is recorded in human-friendly format.

Preparing Metadata and Data Documentation

While our digital files are safely stored and backed up on storage media awaiting ingest and archiving in the Digital Archiving System, we need to turn our attention to some housekeeping duties. They involve preparing our metadata and data documentation for the upcoming process to ensure the smooth ingest and proper archiving of files.

This requires a clear and well-organized record of the metadata and data documentation produced so far in the process: what they contain and how they relate to one another. This record covers tables/databases with lists (or directories) of file names, the files’ metadata, and data documentation. Throughout previous chapters, we described how these documents are developed or generated through planning, inventory creation, review, selection, organization, description, and digitization of material. As a result, at this point in the process, we should have created the following metadata and data documentation:

a) The Table of Archive’s Structure, which started its life as the Identification Inventory and grew into its present form through the organization and description of material. It contains metadata on the archive’s structure (the grouping of files into series, subseries, and folders) and any additional descriptive and technical metadata we chose to include in it.

b) Through the digitization process, we have created databases recording each digital surrogate and the metadata we selected to capture about it.

Digitizing equipment and software have also generated additional databases containing the metadata we chose to capture on the surrogates’ technical attributes and/or the history of actions performed on them during digitization.

Finally, we may also have produced text documents containing data documentation, that is, information about the context of the digital surrogates we created or about the digitization process itself. These documents allow others to understand how our data can be interpreted and used.

c) A database of born-digital files for preservation with their basic metadata will either already exist or be easily created using simple software tools such as “DROID” or “IngestList.”

d) There might be additional pre-existing tables/databases or text files containing metadata and/or data documentation about certain item groups or the entire collection.
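The database mentioned in item c) can be sketched in a few lines of Python. This is a minimal illustration under our own assumptions, not a substitute for DROID or IngestList, which also identify file formats: it walks a folder of born-digital files and records each file's relative path, size, last-modified time (in UTC), and extension. The column names are hypothetical and should be adapted to our own tables.

```python
import csv
from datetime import datetime, timezone
from pathlib import Path

# Hypothetical column names for the inventory table.
FIELDS = ["path", "size_bytes", "modified_utc", "extension"]


def build_inventory(root):
    """List every file under `root` with a few basic attributes."""
    root = Path(root)
    rows = []
    for path in sorted(root.rglob("*")):
        if not path.is_file():
            continue  # skip folders; they are implied by the relative paths
        info = path.stat()
        rows.append({
            "path": path.relative_to(root).as_posix(),
            "size_bytes": info.st_size,
            "modified_utc": datetime.fromtimestamp(
                info.st_mtime, tz=timezone.utc
            ).isoformat(timespec="seconds"),
            "extension": path.suffix.lower(),
        })
    return rows


def write_inventory(rows, out_path):
    """Save the inventory as a CSV table that opens in any spreadsheet program."""
    with open(out_path, "w", newline="", encoding="utf-8") as fh:
        writer = csv.DictWriter(fh, fieldnames=FIELDS)
        writer.writeheader()
        writer.writerows(rows)
```

For example, `write_inventory(build_inventory("born_digital"), "born_digital_inventory.csv")`, where both names are placeholders. Writing the CSV outside the scanned folder keeps the inventory from listing itself.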

For our digital content, metadata, and data documentation to be properly ingested into the Digital Archiving System, we need to tell the system software what these documents are and how they relate to each other. The system can then, for example, correctly attach metadata held in one database to the items it describes, even when those items are listed in a different database, and link both to the data documentation that explains the items’ context.
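The kind of linking described above can be illustrated with a small Python sketch. It assumes, hypothetically, that a surrogates table and a technical metadata table share a `file_name` column, and that each item's series (`series`) points to one data documentation file. Items without a match are reported rather than silently dropped, so gaps in our metadata come to light before ingest. How a real Digital Archiving System expects these relations to be supplied will differ.

```python
import csv
import io


def link_records(surrogates, technical, documentation, key="file_name"):
    """Merge technical metadata into surrogate records that share the same key,
    and attach the data documentation file recorded for each item's series."""
    technical_by_key = {row[key]: row for row in technical}
    linked, unmatched = [], []
    for row in surrogates:
        tech = technical_by_key.get(row[key])
        if tech is None:
            unmatched.append(row[key])  # report the gap instead of dropping it silently
            continue
        record = {**row, **tech}
        record["documentation"] = documentation.get(row["series"], "")
        linked.append(record)
    return linked, unmatched


# Illustrative data only; real tables would be read from files with csv.DictReader.
SURROGATES_CSV = """file_name,series,title
HRA_S01_0001.tif,S01,Letter to the ministry
HRA_S01_0002.tif,S01,Witness statement
"""
TECHNICAL_CSV = """file_name,width_px,height_px
HRA_S01_0001.tif,4000,5600
"""

if __name__ == "__main__":
    surrogates = list(csv.DictReader(io.StringIO(SURROGATES_CSV)))
    technical = list(csv.DictReader(io.StringIO(TECHNICAL_CSV)))
    docs = {"S01": "S01_digitization_notes.pdf"}  # hypothetical documentation file
    linked, unmatched = link_records(surrogates, technical, docs)
    print(linked)
    print("No technical metadata for:", unmatched)
```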

As part of these preparations, we might also need to manually split, merge, or restructure some of our tables/databases to bring them into a more appropriate format.

The exact steps needed to prepare our metadata and data documentation, and the way we enter information about their interrelations into the Digital Archiving System, will depend on the characteristics of the archive and of the system itself.

Yet, regardless of these specifics, we will always need a clear overview, a map, or a scheme of our metadata and data documentation and how they are related before we can begin the ingest.

Preservation and Preparation of Data for Archiving

We can now move on to the preservation actions and preparation of our digital data for ingest and archiving.

Cleaning

The first thing we should always do before working with digital data intended for preservation is to run an antivirus scan, connecting the storage media to a previously scanned computer that is not connected to any local network or to the internet.

Backup

Then comes the backup. At the end of the digitization process, we already created backups of the digital surrogates’ master files. If we have not yet done the same for the born-digital data, we should back it up now by producing two copies and storing them on separate storage media, if possible at two different locations.

File Naming

While our digital surrogates have already been named in line with the naming convention we developed and adopted, our born-digital files might still have their original names. We must therefore apply the naming convention to the born-digital files and rename them accordingly. Their names will then contain the same components (identification, description, technical, or other) that we selected and used for the digital surrogates, as described in the digitization chapter. Reasonably simple, easy-to-use software tools, such as “Rename Master” and “File Renamer Basic,” can rename our files automatically according to the parameters we set.
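To show what such tools do, here is a minimal Python sketch that applies a hypothetical convention, `COLLECTION_SERIES_0001.ext`, to a folder of born-digital files. The convention, the alphabetical numbering, and the function names are assumptions for illustration; our real convention comes from the digitization chapter. The sketch refuses to overwrite existing files and returns the old-to-new name pairs, which are worth keeping as metadata so each file's original name is not lost.

```python
from pathlib import Path


def new_name(collection, series, number, suffix):
    """Hypothetical convention: COLLECTION_SERIES_0001.ext (lower-case extension)."""
    return f"{collection}_{series}_{number:04d}{suffix.lower()}"


def plan_renames(file_names, collection, series):
    """Return (old, new) name pairs, numbering files in alphabetical order."""
    return [
        (old, new_name(collection, series, i, Path(old).suffix))
        for i, old in enumerate(sorted(file_names), start=1)
    ]


def apply_renames(folder, plan):
    """Rename files in `folder` according to `plan`, refusing to overwrite anything."""
    folder = Path(folder)
    targets = [new for _, new in plan]
    if len(set(targets)) != len(targets):
        raise ValueError("the naming convention produced duplicate names")
    for old, new in plan:
        if old != new and (folder / new).exists():
            raise FileExistsError(folder / new)
    for old, new in plan:
        (folder / old).rename(folder / new)
    return plan  # keep this list: it records each file's original name
```

Running `plan_renames` first and reviewing its output before calling `apply_renames` gives us a dry run.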

Metadata

In the previous section, we took stock of the metadata and data documentation collected thus far in the process. We will need to ingest our metadata in a specific, fixed format that our Digital Archiving System recognizes. This format will be based on the metadata standard we selected earlier in the process, which we now need to apply when ingesting data into the system.

If, as this manual advises, we decided on a metadata standard in the planning phase and implemented it through the description and digitization phases, our metadata will already have been gathered in accordance with that standard. We should then be able to prepare it for ingest in the system-recognizable format with only basic technical adjustments, or by mapping our metadata to the standard. For example, in the digitization section, we mentioned that the “Dublin Core” basic metadata standard is supported by most digital archiving software. Hence, if we applied this standard when collecting metadata from the beginning, and selected software that supports it, we should now be able to translate the collected metadata into a format our Digital Archiving System can recognize and properly ingest.
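A minimal Python sketch of such a mapping follows. The column names on the left of `FIELD_MAP` are hypothetical stand-ins for our own table's headings; the values on the right are genuine elements of the Dublin Core element set (`title`, `creator`, `date`, and so on), written in the standard Dublin Core namespace. The `record` wrapper element is an assumption too: each Digital Archiving System defines its own import format, so the output would need adjusting to it. Columns that have no mapping are listed so we can decide what to do with them before ingest.

```python
import xml.etree.ElementTree as ET

DC_NS = "http://purl.org/dc/elements/1.1/"
ET.register_namespace("dc", DC_NS)

# Hypothetical mapping from our own table's column names to Dublin Core elements.
FIELD_MAP = {
    "file_name": "identifier",
    "title": "title",
    "author": "creator",
    "date_created": "date",
    "summary": "description",
    "file_format": "format",
    "language": "language",
    "access_restrictions": "rights",
}


def to_dublin_core(row):
    """Build one XML record with a Dublin Core element for each mapped, non-empty column."""
    record = ET.Element("record")  # hypothetical wrapper; the real one depends on the system
    for column, element in FIELD_MAP.items():
        value = (row.get(column) or "").strip()
        if value:
            ET.SubElement(record, f"{{{DC_NS}}}{element}").text = value
    return record


def unmapped_columns(row):
    """Columns with no Dublin Core counterpart; these need a decision before ingest."""
    return sorted(set(row) - set(FIELD_MAP))


if __name__ == "__main__":
    row = {"file_name": "HRA_S01_0001.tif", "title": "Letter to the ministry",
           "author": "Local victims' association", "date_created": "1995-03-14",
           "box": "12"}
    print(ET.tostring(to_dublin_core(row), encoding="unicode"))
    print("Not mapped:", unmapped_columns(row))
```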

Preservation of Metadata

In the earlier discussion of metadata and the importance of its proper collection and management, we mentioned the key role it has for long-term preservation of digital archival data.

This role becomes especially relevant at this point in the process, as we prepare our material for ingest and long-term preservation. Before we ingest and archive our data, we need to make sure that we capture the metadata that will allow our digital material to be adequately preserved, its authenticity maintained, and its usability ensured in the future. To understand which essential set of metadata we need to capture to preserve our invaluable data, we will need to get to know our digital files and their formats a bit better, including their validity, quality, and fixity.

Identifying and Converting File Formats

Back in the digitization process, we established the need to store our digital material in file formats that are appropriate for long-term preservation. Primarily, these are formats that have a wide user/support community and have proven resilient to change over time. Such formats are typically uncompressed or use “lossless” compression, which keeps all of the original data, as opposed to “lossy” formats, which permanently discard some data when a file is compressed and can lose further quality each time the file is re-encoded.

Our digitized material has already been stored in appropriate preservation formats, and now we need to make sure the same is true of our born-digital material.

We first need to identify the format of our born-digital files, which we can do with specialized software, such as “DROID” or “Siegfried,” that automatically identifies the format of batches of files. We then convert any files that need to be put into a different, preservation-appropriate format. Specialized conversion software can be very useful here. Such software is usually format-specific: for example, “Audio/Video to WAV Converter” converts audio and video files to WAV format, while “CDS Convert” converts documents, presentations, and images between different software formats.
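Tools such as DROID and Siegfried identify formats mainly by comparing a file's internal byte patterns (its “signature” or “magic number”) with a registry of known signatures, rather than trusting the file extension. The Python sketch below illustrates the idea with a handful of well-known signatures. It is a simplified stand-in built on our own assumptions, not a replacement for those tools; for instance, it cannot tell a DOCX file from any other ZIP-based container.

```python
from pathlib import Path

# A few well-known signatures: (label, [(byte offset, expected bytes), ...]).
SIGNATURES = [
    ("PDF document", [(0, b"%PDF-")]),
    ("PNG image", [(0, b"\x89PNG\r\n\x1a\n")]),
    ("JPEG image", [(0, b"\xff\xd8\xff")]),
    ("TIFF image", [(0, b"II*\x00")]),   # little-endian TIFF
    ("TIFF image", [(0, b"MM\x00*")]),   # big-endian TIFF
    ("GIF image", [(0, b"GIF87a")]),
    ("GIF image", [(0, b"GIF89a")]),
    ("WAV audio", [(0, b"RIFF"), (8, b"WAVE")]),
    ("ZIP container (e.g. DOCX, XLSX, ODT)", [(0, b"PK\x03\x04")]),
    ("OLE2 container (e.g. legacy DOC, XLS)", [(0, b"\xd0\xcf\x11\xe0\xa1\xb1\x1a\xe1")]),
]


def identify(header):
    """Match the first bytes of a file against the signature table."""
    for label, parts in SIGNATURES:
        if all(header[off:off + len(magic)] == magic for off, magic in parts):
            return label
    return "unknown"


def identify_folder(root):
    """Map each file path under `root` to a format label based on its first 16 bytes."""
    results = {}
    for path in sorted(Path(root).rglob("*")):
        if path.is_file():
            with open(path, "rb") as fh:
                results[str(path)] = identify(fh.read(16))
    return results
```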

Validating Files

The next step in preparing our digital content for proper preservation in the Digital Archiving System is validation of our files—that is, establishing that they really are what we think they are.

In essence, file validation checks whether the format of a file is proper and correct, that is, whether it is valid. Through file format validation, we can check whether a file conforms to its file format specification, the standard that every file in a given format, such as JPEG, DOC, or TIFF, must follow. As an illustration, file format validation could be compared to inspecting boxes or folders in a physical archive to ensure they are intact, since damaged containers could let items fall out or be damaged themselves.

In digital archiving, file format validation is particularly important for long-term preservation and access, for a number of reasons. Invalid files are difficult to manage over time, especially when a file needs to be converted or migrated. Moreover, access might become difficult or impossible, as files with nonconforming formats become harder to open and use over time. Finally, future software may be unable to render invalid files properly, if at all.

Of course, we do not manually inspect whether a file format conforms to its specifications; there is software available to perform that function and identify and create reports on the files that are found not to be valid. We already mentioned one such software tool—JHOVE—in the chapter on quality control at the end of the digitization process, but there are also other tools, most of which are specialized for a certain group of formats.
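To make the idea of “conforming to a specification” concrete, the Python sketch below checks a few structural rules of the PNG image format: the file must start with the PNG signature, the first chunk must be IHDR, each chunk's CRC must match its contents, and the file must end with an IEND chunk. This is only a partial check written for illustration; a dedicated validator such as JHOVE tests far more rules, and for many more formats.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def check_png_structure(data):
    """Return a list of structural problems; an empty list means these checks passed."""
    if not data.startswith(PNG_SIGNATURE):
        return ["missing PNG signature"]
    problems = []
    pos = len(PNG_SIGNATURE)
    first_chunk = True
    seen_iend = False
    while pos < len(data):
        if pos + 8 > len(data):
            problems.append(f"truncated chunk header at byte {pos}")
            break
        # Each chunk: 4-byte big-endian length, 4-byte type, data, 4-byte CRC.
        length, chunk_type = struct.unpack(">I4s", data[pos:pos + 8])
        end = pos + 8 + length + 4
        if end > len(data):
            problems.append(f"chunk {chunk_type!r} truncated at byte {pos}")
            break
        # The CRC covers the chunk type and data, but not the length field.
        (stored_crc,) = struct.unpack(">I", data[end - 4:end])
        if zlib.crc32(data[pos + 4:end - 4]) != stored_crc:
            problems.append(f"CRC mismatch in chunk {chunk_type!r}")
        if first_chunk and chunk_type != b"IHDR":
            problems.append("first chunk is not IHDR")
        first_chunk = False
        pos = end
        if chunk_type == b"IEND":
            seen_iend = True
            break
    if not seen_iend:
        problems.append("missing IEND chunk")
    elif pos != len(data):
        problems.append("unexpected data after IEND")
    return problems
```

A file would be read with `open(path, "rb").read()` and passed to `check_png_structure`; any non-empty result marks the file for closer inspection.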

Fixity

Fixity, a crucial element of the long-term preservation of files and of maintaining their integrity, authenticity, and usability, is the state of being unchanged or permanent. In essence, fixity allows us to determine whether a file has been altered or corrupted over time and to track and record any such changes.

To do this, we record the initial state of a file before ingest by taking its “digital fingerprint.” Fixity software reads the entire contents of a file and runs them through a checksum algorithm (such as MD5 or SHA-256), which produces a short alphanumeric code, a “checksum.” Just like a human fingerprint, this checksum is, for all practical purposes, unique to that file, and virtually any change to the file, even of a single bit, produces a different checksum. The checksum is recorded as part of the file’s metadata, so we can repeat the same fixity check at any time and establish whether the checksum, and therefore the file, has changed. Recording this type of preservation metadata is crucial for confirming and establishing a digital item’s “chain of custody.”
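As a minimal sketch, the function below computes a file's checksum with Python's standard `hashlib` module, reading the file in chunks so that even large audio or video files do not have to fit into memory. SHA-256 is used as the default here as one common choice; MD5 and SHA-1 are also widely used for fixity. Whichever algorithm we pick should be recorded in the metadata alongside the checksum, since a checksum can only be re-checked with the same algorithm.

```python
import hashlib


def file_checksum(path, algorithm="sha256", chunk_size=1024 * 1024):
    """Compute a file's checksum, reading it in 1 MiB chunks by default."""
    digest = hashlib.new(algorithm)
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

For example, `file_checksum("HRA_S01_0001.tif")` (a placeholder file name) returns a 64-character hexadecimal string for SHA-256.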

In addition to allowing us to establish any changes to a file that have occurred over time, fixity is also useful when we are migrating files between different storage media, units, or digital depositories. It is highly advisable to apply a fixity check after each such file transfer to establish any changes that might have occurred in the course of the file migration.
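A common way to apply this to whole folders is a checksum manifest: a list of every file with its checksum, made before a transfer and compared with a second manifest made after it. The Python sketch below assumes SHA-256 and uses hypothetical function names; it reports files that went missing, appeared, or changed in transit. The same comparison works for checking that backup copies match the originals.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1024 * 1024):
    """SHA-256 checksum of one file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root):
    """Map each file's path (relative to `root`) to its SHA-256 checksum."""
    root = Path(root)
    return {
        p.relative_to(root).as_posix(): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def write_manifest(manifest, out_path):
    """Save as 'checksum  path' lines, the layout printed by the sha256sum utility."""
    with open(out_path, "w", encoding="utf-8") as fh:
        for name, checksum in manifest.items():
            fh.write(f"{checksum}  {name}\n")


def compare_manifests(before, after):
    """List files missing, added, or changed between two manifests."""
    return {
        "missing": sorted(set(before) - set(after)),
        "added": sorted(set(after) - set(before)),
        "changed": sorted(k for k in set(before) & set(after) if before[k] != after[k]),
    }
```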

Further, fixity allows us to verify that any backup copies of a file are complete and correct. A file’s checksum can also be shared with other users of the file so they can verify that they have received the correct, unaltered file. A range of software can perform fixity checks, such as “Checksum” and “Exact.File,” to name just a few.

Quality Control

Many things can go wrong with digital files as they are created, managed, and stored before they reach the point of ingest. During digitization, an error or a virus can leave files damaged, incomplete, or reduced in quality. It is therefore good practice to perform as comprehensive a quality check of all our digital files as possible before ingest and archiving. A whole set of tools is available, each performing either a specific quality control action or a group of them. Examples range from NARA’s File Analyzer and Metadata Harvester, which has a wide range of functions, to the highly specialized “Fingerdet,” which helps detect fingerprints on digitized items.
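The tools named above cover far more ground, but one basic check is easy to sketch in Python: flagging files that are empty (or smaller than a threshold we choose) and files that cannot be opened at all, both common signs of an interrupted copy or a failed save. The function name and the default threshold of one byte are assumptions for illustration.

```python
from pathlib import Path


def quick_quality_report(root, min_bytes=1):
    """Flag files smaller than `min_bytes` and files that cannot be opened."""
    report = {"too_small": [], "unreadable": []}
    for path in sorted(Path(root).rglob("*")):
        if not path.is_file():
            continue
        try:
            size = path.stat().st_size
            with open(path, "rb") as fh:
                fh.read(1)  # confirms the file can actually be opened and read
        except OSError:
            report["unreadable"].append(str(path))
            continue
        if size < min_bytes:
            report["too_small"].append(str(path))
    return report
```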

Removing Duplicates and Weeding Files

While we are at it, we should use this opportunity to clean up our files a bit. Over the course of collecting, organizing, copying, and temporarily storing our digital files, it is likely that we will have created duplicates, or that folders contain hidden files or files that do not belong in them. Having duplicates and other unwanted files in our collection can create confusion, in addition to unnecessarily taking up space in our storage. It is therefore a good practice to remove them before ingest. Depending on the size of the collection, this could be a very time-consuming and error-prone task if performed manually. Luckily, there are software tools that can do this for us efficiently and reliably. Examples of dedicated tools for this purpose include “FolderMatch” and “CloneSpy.”
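The core idea behind duplicate finders can be sketched in Python: group files by size, compute checksums only for files that share a size, and treat files with identical checksums as duplicates. The sketch also lists hidden and system files. The `SYSTEM_FILES` list is an assumption, and treating names that start with a dot as hidden follows the macOS/Linux convention (Windows marks hidden files with an attribute instead). The functions only report candidates; deciding which copy to keep remains an archival decision.

```python
import hashlib
from collections import defaultdict
from pathlib import Path

# Assumed list of operating-system clutter files; extend it for your own environment.
SYSTEM_FILES = {"thumbs.db", "desktop.ini", ".ds_store"}


def _sha256(path, chunk_size=1024 * 1024):
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_duplicates(root):
    """Group files with identical content; only files of equal size are hashed."""
    by_size = defaultdict(list)
    for path in Path(root).rglob("*"):
        if path.is_file():
            by_size[path.stat().st_size].append(path)
    by_hash = defaultdict(list)
    for paths in by_size.values():
        if len(paths) > 1:
            for path in paths:
                by_hash[_sha256(path)].append(path)
    return sorted(sorted(group) for group in by_hash.values() if len(group) > 1)


def find_unwanted(root):
    """List hidden (dot-prefixed) files and known system files."""
    return sorted(
        p for p in Path(root).rglob("*")
        if p.is_file() and (p.name.startswith(".") or p.name.lower() in SYSTEM_FILES)
    )
```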

Metadata on Private, Sensitive, Confidential, or Copyrighted Data

Given the importance of data safety and security when archiving material related to human rights violations, it is highly advisable to carry out an additional review of the material at this point, before it is ingested, with respect to privacy, sensitivity, confidentiality, and copyright.